The goal of the course is to teach students about the rise of Non-Volatile Memory (NVM) storage technologies in commodity computing, their impact on system design (architecture, operating system), distributed systems, storage services, and application designs.

Syllabus

What's new

- End-May, 2024: All lecture videos should be online. Please stay tuned.

- May 8th, 2024: The website is updated.

Storage Systems is a unique course due to its sole focus on NVM storage and its impact on research and education. Slow storage has been the Achilles’ heel in building fast and efficient systems. However, in the last 10-15 years we have witnessed the rise of Non-Volatile Memory (NVM) storage technologies in commodity computing. These technologies, such as NAND flash and Optane, offer multiple orders of magnitude performance improvements over hard-disk drives (HDDs). This advancement represents one of the most fundamental shifts in storage technology since the emergence of the HDD in the 1960s. The objective of this course is to educate students about the impact and implications of NVM storage on system design (architecture, operating system), distributed systems, storage services, and application designs.

We take inspiration from the 2018 Data Storage Research Vision 2025 report (https://dl.acm.org/doi/book/10.5555/3316807), which observes (see Section 6.1):

"Many students may only associate storage systems with hard disk drives or a specific file system, which is obviously less attractive compared to, say, self-driving cars. This situation is partly due to the fact that there is no clearly defined course on storage systems in the majority of universities."

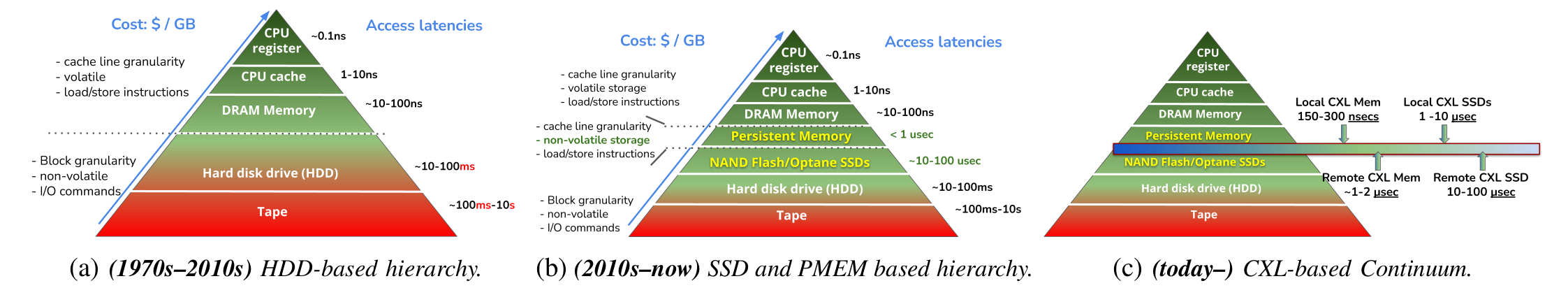

Evolution of the Storage-Memory hierarchy: (a) the HDD-driven hierarchy; (b) the SSD- and PMEM-based storage hierarchy; and (c) the modern CXL-based Storage-Memory Continuum.

CCGrid 2024 Paper and Slides

We have published our experience in setting up this course at the 24th IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing (CCGrid'24), held in Philadelphia on May 6-9, 2024 (https://2024.ccgrid-conference.org/program/). Please cite the following paper when referencing this material.

Animesh Trivedi, Matthijs Jansen, Krijn Doekemeijer, Sacheendra Talluri, and Nick Tehrany. Reviving Storage Systems Education in the 21st Century — An experience report. In the Proceedings of the 24th IEEE/ACM international Symposium on Cluster, Cloud and Internet Computing (CCGrid), Philadelphia, pages 616--625, DOI: 10.1109/CCGrid59990.2024.00074, May 6-9, 2024.

The paper is available online at:

The conference talk is available online at: https://docs.google.com/presentation/d/1XcGmP2kTrY-OW563licFKnbG05_p-jiU8E3eGHVkFY4/edit?usp=sharing

Many thanks to Jesse Donkervliet, who presented this work on behalf of the authors at CCGrid 2024.

Lecture content and Slides

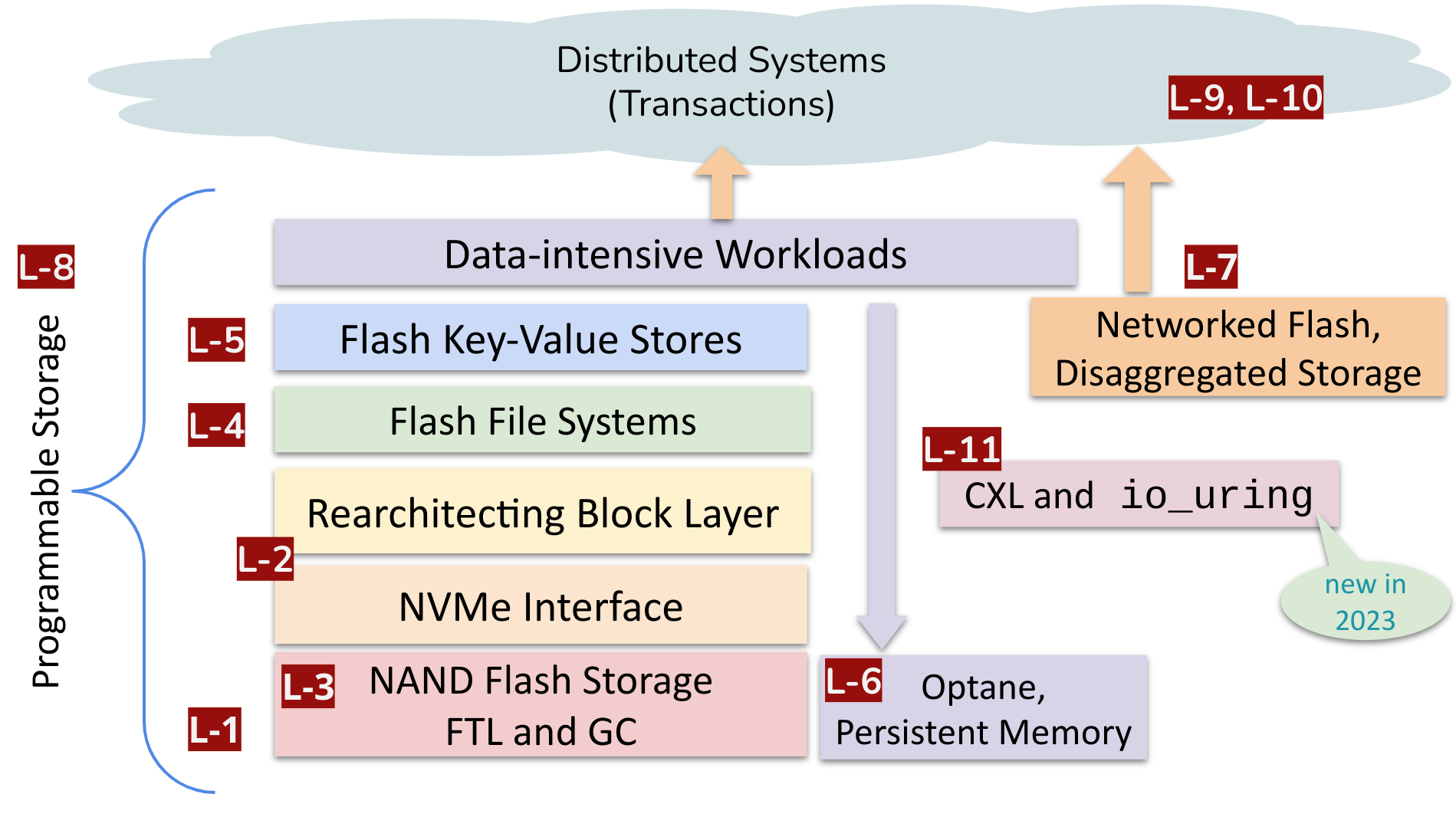

Lectures layout (L1-L11) in Storage Systems course.

Storage Systems (VU catalogue number XM_0092) is an MSc-level course that was first offered in 2020. The course covers the rise of Non-Volatile Memory (NVM) storage technologies in commodity computing, their impact on system design (architecture, operating system), distributed systems, storage services, application designs, and emerging trends (CXL and io_uring). We covered the following topics in the 2023 edition:

Introduction to NVM Storage: We introduce the historical context, HDDs, NAND/NOR/XOR flash cells and chips, media-level differences between SSDs and HDDs, SSD packaging (dies, blocks, pages, internal organization), operations (I/O, GC, and erase), and performance and endurance properties. We highlight that the storage design guidelines and tradeoffs have changed with SSDs and the new triangle of Storage Hierarchy.

Host Interfacing and Software Implications: We discuss how the 2-3 orders of magnitude performance improvement of SSDs, combined with their high parallelism, necessitated the development of a new host controller interface (NVM Express, NVMe) and the re-design of the Linux block layer (multi-queue block layer design, polling-driven architecture).

Flash FTL and Garbage Collection: We introduce the concept of the Flash Translation Layer (FTL), its responsibilities for actively managing flash chips (building on Lecture 1), and the design of garbage collection (GC) algorithms. We then discuss why host software (file systems, data stores) needs to be aware of the FTL design, GC operations, and the trade-offs captured as the SSD Unwritten Contracts. We then look at SSD-managed and host-managed FTL designs. A host-managed FTL design is used in the practical assignment.
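To make the FTL and GC ideas above concrete, here is a minimal sketch of a page-mapped FTL with greedy victim selection. It is not the course's assignment framework: the class `ToyFTL`, the constant `PAGES_PER_BLOCK`, the tiny geometry, and the policy of keeping one spare block for relocation are all assumptions chosen for illustration.

```python
PAGES_PER_BLOCK = 4  # assumed geometry, far smaller than real flash


class ToyFTL:
    """Toy page-mapped FTL: out-of-place writes plus greedy garbage collection."""

    def __init__(self, num_blocks):
        if num_blocks < 3:
            raise ValueError("need at least 3 blocks")
        self.num_blocks = num_blocks
        # Assumption: one block of over-provisioning and one GC reserve block.
        self.logical_pages = (num_blocks - 2) * PAGES_PER_BLOCK
        self.l2p = {}      # logical page -> (block, offset)
        self.owner = {}    # (block, offset) -> logical page, valid pages only
        self.data = {}     # (block, offset) -> payload, until the block is erased
        self.free_blocks = list(range(1, num_blocks))
        self.active = 0    # block currently being filled
        self.next_off = 0  # next unwritten page in the active block
        self.host_writes = 0
        self.flash_writes = 0
        self.erases = 0

    def _valid_count(self, block):
        return sum(1 for (b, _) in self.owner if b == block)

    def _program(self, lpn, payload):
        """Write one page at the write frontier and remap the logical page."""
        if self.next_off == PAGES_PER_BLOCK:
            self.active = self.free_blocks.pop(0)
            self.next_off = 0
        loc = (self.active, self.next_off)
        self.next_off += 1
        old = self.l2p.get(lpn)
        if old is not None:
            del self.owner[old]  # the previous copy becomes invalid
        self.l2p[lpn] = loc
        self.owner[loc] = lpn
        self.data[loc] = payload
        self.flash_writes += 1

    def _gc(self):
        """Greedy GC: reclaim the full block holding the fewest valid pages."""
        candidates = [b for b in range(self.num_blocks) if b not in self.free_blocks]
        victim = min(candidates, key=self._valid_count)
        live = [(loc, lpn) for loc, lpn in self.owner.items() if loc[0] == victim]
        for loc, lpn in live:
            self._program(lpn, self.data[loc])  # relocate still-valid pages
        for off in range(PAGES_PER_BLOCK):
            self.data.pop((victim, off), None)  # erase the whole block
        self.free_blocks.append(victim)
        self.erases += 1

    def write(self, lpn, payload):
        if not 0 <= lpn < self.logical_pages:
            raise ValueError("logical page out of range")
        # Collect only when the frontier is full and just the reserve block is left.
        if self.next_off == PAGES_PER_BLOCK and len(self.free_blocks) <= 1:
            self._gc()
        self.host_writes += 1
        self._program(lpn, payload)

    def read(self, lpn):
        return self.data[self.l2p[lpn]]
```

In this sketch, `flash_writes / host_writes` gives the write amplification caused by GC relocations, which is one of the trade-offs that host software may want to be aware of.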

Flash File Systems: We discuss how SSD internal properties and FTL designs (Lectures 2 and 3) prefer sequential writes, which can be generated by log-structured file systems (LFS). We analyze LFS designs, GC, their optimizations for flash SSDs (F2FS, SFS file systems), and novel FS designs with software-defined flash such as Direct File System (DFS) and Nameless Writes.

Flash Key-Value Stores: We introduce important lookup data structures (B+ tree, hash table, LSM tree) and how unique properties of flash storage (asymmetrical read/write, and high parallelism) require these structures to consider their application-level read/write amplifications and space requirements (the RUM Conjecture). We cover research projects such as LOCS, WiscKey, uTree, SILK.

Byte-addressable Persistent Memories: We discuss performance characterization of Intel Optane persistent memory, its impact on building novel abstractions like persistent data structures (NVHeap, PMDK) and even novel OS designs (Twizzler), thus blurring the difference between a file and memory.

Networked and Disaggregated Flash: We draw an analogy with Lectures 1 and 2 and establish that fast storage requires fast networks and co-designed network-storage protocols such as NVMe-over-Fabrics (NVMoF). We discuss the concept of storage/flash disaggregation and various block-level, file system-level, and application-level (RDMA) ways to access remote storage (FlashNet).

Programmable and Computational Storage Devices (CSDs): We introduce the problem of the data movement wall and the opportunity with SSDs that have an active programmable element, the FTL. Hence, users can run their data processing programs close to storage, inside an SSD with the FTL, thus enabling Computational or Programmable storage. We discuss its origins (Active Storage, Intelligent Disks), modern interpretations (Willow, Biscuit, INSIDER), various hardware/software design options that offer performance and efficiency via specialization, and the recent efforts to standardize it.

NVM Impact on Data Processing Systems: We identify the opportunity with fast storage and networks (L-7) that help distributed data processing frameworks to efficiently manage their runtime state and data exchange operations (NodeKernel and Crail). We then discuss how data storage formats become a bottleneck due to their HDD-era design assumptions, such as “the CPU is fast and I/O is slow” (Albis).

NVM Impact on Distributed Transactions: We link the write-once property of flash chips with the design of a transaction system and discuss networked flash-based (L-7) Corfu and Tango transaction systems. We discuss the historical context in which such systems were built, and what unique properties flash storage offers to realize these systems now (but not before).
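The write-once idea mentioned above can be illustrated with a small single-process sketch of a shared log in which every position may be written exactly once. This is only loosely inspired by the shared-log designs named in the lecture and is not their actual protocol: there is no networking, replication, or failure handling, and the names `WriteOnceLog`, `WriteOnceError`, and `next_token` are hypothetical.

```python
class WriteOnceError(Exception):
    """Raised when a log position is written a second time."""


class WriteOnceLog:
    """In-memory log whose positions can be written once and never overwritten."""

    def __init__(self):
        self._cells = {}  # position -> entry
        self._tail = 0    # next position to hand out

    def next_token(self):
        """Sequencer role: reserve and return the next unused position."""
        pos = self._tail
        self._tail += 1
        return pos

    def write(self, pos, entry):
        """Store an entry; a second write to the same position is rejected."""
        if pos in self._cells:
            raise WriteOnceError(f"position {pos} is already written")
        self._cells[pos] = entry

    def append(self, entry):
        """Reserve a position, write the entry there, and return the position."""
        pos = self.next_token()
        self.write(pos, entry)
        return pos

    def read(self, pos):
        """Return the entry at pos; raises KeyError if it was never written."""
        return self._cells[pos]
```

Because a position never changes once written, every reader that replays the log in position order sees the same sequence of entries, which is the property a transaction layer can build on.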

Emerging Topics: In this lecture (new in the 2023 edition), we consider how NVM storage connects to wider hardware trends (CXL) and necessitates development of a new software I/O API (io_uring). CXL connects all storage and memory elements in a byte-addressable, coherent manner, thus giving rise to a new “Storage-Memory Continuum” with a highly granular performance spectrum instead of the classical triangle of cache-memory-storage hierarchy. Later, we discuss the emergence of io_uring, a new asynchronous high-performance I/O API in Linux and its design [69], [70]. These developments led to a re-division of labor among hardware-software-OS in a storage system.

Practical Work

To facilitate “learning-by-doing”, in the practical work the students design and implement an FTL for an NVMe SSD with Zoned Namespaces (ZNS) in QEMU and build a workload-specific file system with RocksDB. There are five milestones in the practical work:

- A new device is in town - The students become familiar with the Linux development environment, framework (QEMU, NVMe, ZNS), and the state-of-the-practice Linux nvme-cli tools. They need to set up a QEMU VM with ZNS and NVMe devices, read the NVMe 1.4 specification with the ZNS Technical Proposal (TP-4053), and test NVMe commands (with nvme-cli) to interact with the NVMe and ZNS devices.

- I can’t read, is there a translator here? - Implement a host-side hybrid log-data FTL in userspace (using libnvme). The idea here is to convert a ZNS SSD (sequential writes, zones, resets) into a conventional SSD (read, write anywhere). For the hybrid FTL, the log segments are page-mapped, while the data segments are zone-mapped. No GC is expected at this stage.

- It’s 2023, we recycle - Enable GC in the hybrid FTL, where pages from the logs are moved and mapped into zone-mapped segment areas. The students need to decide how to identify and keep track of live and dead pages and segments, and how to keep mappings consistent with concurrent I/O.

- We love Rock(sDB) ‘n’ Roll! - The students familiarize themselves with RocksDB, and design and implement a RocksDB-specific (domain-specialized) file system on top of their FTL-ZNS device (building on the third assignment). We offer a free design space where the students can develop their own file system design.

- Wake up, Neo - The last assignment is to make the whole project durable and consistent with an orderly shutdown and restart, and pass the RocksDB persistency tests. The students can earn additional bonuses by demonstrating the completeness of their code on real NVMe ZNS SSDs donated by Western Digital.
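The hybrid log-data FTL described in the second and third milestones can be sketched roughly as follows. This is one possible reading of the milestone descriptions, not the course's reference design: it models zones as in-memory lists instead of talking to a device through libnvme, uses a single log zone, ignores concurrent I/O and persistence, and the names `Zone`, `HybridFTL`, `merge`, and `ZONE_PAGES` are hypothetical.

```python
ZONE_PAGES = 4  # assumed zone size in pages


class Zone:
    """ZNS-style zone: pages can only be appended until the zone is reset."""

    def __init__(self):
        self.pages = []

    def append(self, payload):
        if len(self.pages) == ZONE_PAGES:
            raise IOError("zone is full, reset required")
        self.pages.append(payload)
        return len(self.pages) - 1  # offset that was written

    def read(self, offset):
        return self.pages[offset]

    def reset(self):
        self.pages = []


class HybridFTL:
    """Page-mapped log zone in front of zone-mapped data zones."""

    def __init__(self, num_zones):
        if num_zones < 3:
            raise ValueError("need a log zone, a data zone, and a spare zone")
        self.zones = [Zone() for _ in range(num_zones)]
        self.log_zone = 0
        self.free = list(range(1, num_zones))
        # Assumption: one zone is always kept spare so a merge has a target.
        self.logical_pages = (num_zones - 2) * ZONE_PAGES
        self.log_map = {}   # logical page -> offset in the log zone
        self.zone_map = {}  # logical zone -> physical data zone
        self.merges = 0

    def write(self, lpn, payload):
        if not 0 <= lpn < self.logical_pages:
            raise ValueError("logical page out of range")
        log = self.zones[self.log_zone]
        if len(log.pages) == ZONE_PAGES:
            self.merge()  # the GC step: empty the log before reusing it
        self.log_map[lpn] = log.append(payload)

    def read(self, lpn):
        if lpn in self.log_map:  # the newest copy lives in the log
            return self.zones[self.log_zone].read(self.log_map[lpn])
        lzone, off = divmod(lpn, ZONE_PAGES)
        if lzone in self.zone_map:
            return self.zones[self.zone_map[lzone]].read(off)
        return None  # never written

    def merge(self):
        """Fold live log pages into freshly written, zone-mapped data zones."""
        touched = sorted({lpn // ZONE_PAGES for lpn in self.log_map})
        for lzone in touched:
            target = self.free.pop(0)
            for off in range(ZONE_PAGES):
                # Sequential rewrite: newest copy from the log, else the old zone.
                self.zones[target].append(self.read(lzone * ZONE_PAGES + off))
            old = self.zone_map.get(lzone)
            self.zone_map[lzone] = target
            if old is not None:
                self.zones[old].reset()
                self.free.append(old)
        self.zones[self.log_zone].reset()
        self.log_map.clear()
        self.merges += 1
```

A caller sees a conventional "write anywhere" device (`write(lpn, payload)` and `read(lpn)`), while every zone underneath is only ever appended to or reset. Only the latest copy of a page in the log is treated as live during a merge; older copies are dropped when the log zone is reset.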

The project handbook is publicly available here:

Source Code

- Framework for the assignment: https://github.com/atlarge-research/ (to be released, expected end-May, 2024)

- Framework for automated grading of assignments: https://github.com/atlarge-research/ (to be released, expected end-May, 2024)

The source code is released under the MIT License.

Content License

This course content is distributed under the Creative Commons Attribution 4.0 International (CC BY 4.0) license: https://creativecommons.org/licenses/by/4.0/.

Feel free to modify and use the slides in your course as you see fit with attribution.

Editable versions of the slides are available at: https://drive.google.com/drive/folders/1K165plw4xDHZFZHeuhMvpqgEP8MaRBlT?usp=sharing

Contact

- Animesh Trivedi (firstname.lastname @ gmail) -- corresponding author

- Krijn Doekemeijer (k.doekemeijer @ VU DOT NL)

- Zebin Ren (z.ren @ VU DOT NL)

- Matthijs Jansen (m.jansen @ VU DOT NL) - for automated grading framework-related questions

Acknowledgements

This work is supported by generous hardware donations from Western Digital arranged by Matias Bjørling. This work is partially funded by Netherlands-funded projects (Dutch Research Council (NWO) grants OCENW.KLEIN.561 and OCENW.KLEIN.209, and GFP 6G FNS), the EU-funded projects MSCA-RISE Cloudstars and Horizon GraphMassivizer, and the VU Amsterdam PhD innovation program.

Course Code

XM_0092